Hard Drive Encryption

On a totally different encryption tangent, I need to encrypt my hard drives. I’m kind of ashamed that they aren’t encrypted already, given that I studied cyber-security. For a basic home server, though, encrypting the drives never seemed that pressing.

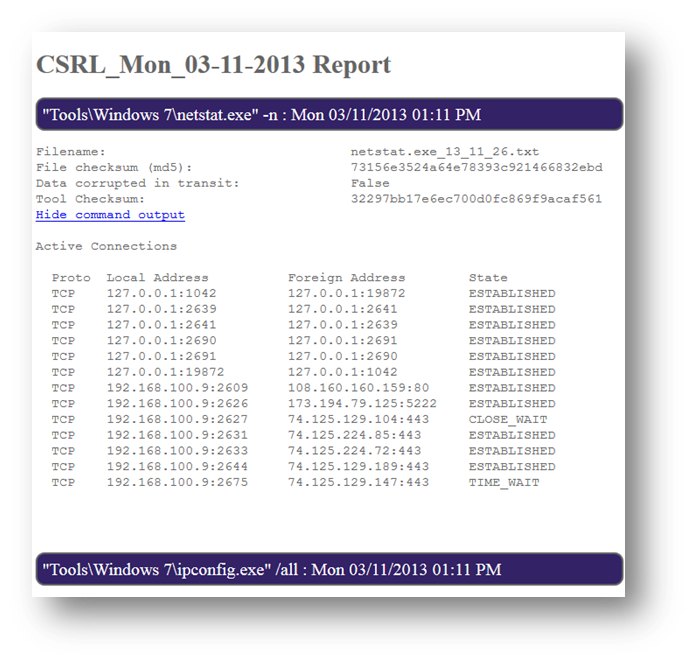

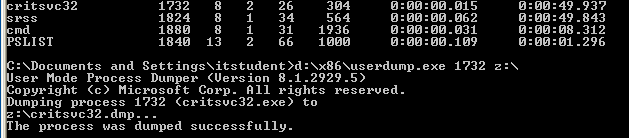

I’m not going crazy or anything with confidential data. However, something really cool about hard drive encryption is that in most cases (strong password, best practices, etc.), if the user is not logged into the computer at the time of seizure, it can be close to impossible (at the time of writing) for forensics to decrypt the data. True, there are tools like PRTK (Password Recovery Toolkit), which is used alongside FTK, that can attempt to recover the password protecting your hard drive. Now correct me if I’m wrong, but if your password is over 12 characters long and mixes letters, numbers, symbols and all that jazz, a brute-force attempt will take practically forever! The investigators are likely to be long gone before anything is returned (the cracking system would also have to be amazing and keep running just as long).
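To put some rough numbers on the "take forever" claim, here is a minimal Python sketch of the brute-force arithmetic. The 95-character alphabet (printable ASCII) and the rate of one billion guesses per second are assumptions picked purely for illustration, not measurements of PRTK or any real cracking rig. Disk encryption tools also run the password through a deliberately slow key derivation step, so a realistic guess rate would likely be far lower.

```python
import math


def keyspace(length: int, alphabet_size: int) -> int:
    """Number of possible passwords of exactly this length."""
    return alphabet_size ** length


def entropy_bits(length: int, alphabet_size: int) -> float:
    """Password strength in bits, assuming every character is chosen at random."""
    return length * math.log2(alphabet_size)


def years_to_exhaust(length: int, alphabet_size: int, guesses_per_second: float) -> float:
    """Years needed to try every password at a constant guess rate."""
    seconds = keyspace(length, alphabet_size) / guesses_per_second
    return seconds / (365.25 * 24 * 3600)


# Assumed values: 12 characters, 95 printable ASCII symbols, 1e9 guesses per second.
print(f"entropy: {entropy_bits(12, 95):.1f} bits")
print(f"worst case: {years_to_exhaust(12, 95, 1e9):.2e} years")
```

On average an attacker finds the password after searching about half the keyspace, so halve the worst-case figure for an expected time. The estimate only holds for randomly chosen passwords; dictionary words and predictable patterns fall much faster.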

There are primarily two types of encryption: hardware and software. Software encryption uses the computer’s own resources to encrypt, while hardware encryption relies on the drive to encrypt data on its own dedicated processor. I prefer the idea of hardware encryption, since it encrypts data at the lowest level and tends to be more secure. If someone has access to your environment with a software encryption scheme, there is a greater likelihood they will be able to get at the key, since it sits in the computer’s memory while the drive is unlocked. There really isn’t much difference in performance. The problem is that not all hard drives come with a dedicated processor for encryption. A simple reference site for an explanation of encryption and the differences can be found here.

My environment consists of three 4 TB hard drives in a RAID5 array that is currently partitioned into two volumes. One volume contains Windows 8 and the other is for storage.
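For reference, RAID5 spends one drive’s worth of space on parity, so usable capacity is (number of drives - 1) × drive size. A quick sketch of that arithmetic (the function name is just mine):

```python
def raid5_usable_tb(drive_count: int, drive_size_tb: float) -> float:
    """Usable capacity of a RAID5 array built from equally sized drives."""
    if drive_count < 3:
        raise ValueError("RAID5 needs at least three drives")
    # One drive's worth of capacity is consumed by distributed parity.
    return (drive_count - 1) * drive_size_tb


# Three 4 TB drives, as in the setup described above.
print(raid5_usable_tb(3, 4), "TB usable")
```

For this array that works out to 8 TB shared between the Windows volume and the storage volume.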

So, my options: hardware or software encryption. I’ve already been using the drives for quite some time, and I don’t really want to lose the data already stored on them. There are some issues I foresee with hardware encryption and a RAID system. Is it even possible with RAID? I would have to concern myself with how encryption affects the striping and parity of the data. It all depends on the drive, and in my case it’s easy: my hard drives don’t even include the capability to hardware encrypt, so on to software encryption.

For software encryption, BitLocker and TrueCrypt are two free solutions that I am familiar with and could consider using. I could also look at converting my entire system into a NAS (FreeBSD and FreeNAS can set up a software-based RAID and they include encryption capabilities) but… I’ll save that for another day.

BitLocker is already available on Windows 8 Pro and Enterprise, but is it better than TrueCrypt? According to Tom’s Hardware, both encryption tools are almost identical in performance. Bottom line, Microsoft’s BitLocker apparently has a slight edge thanks to Intel’s new AES instruction set extensions (AES-NI). Despite this, TrueCrypt is compatible with non-Windows environments and it allows users to create “secret” (hidden) volumes. These volumes are totally hidden and are only accessible by entering the right passphrase at the TrueCrypt passphrase screen.

Mmm, I think I’ll explore both options. BitLocker is quite easy to set up. From the Start screen, type in BitLocker and there it is!

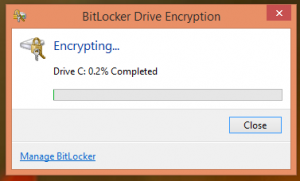

Select “Turn on BitLocker” and follow the wizard instructions. It’ll take a couple of restarts to get things going, followed by a long, long wait while the drive encrypts.

Easy Sauce!

TrueCrypt is slightly different. The install shown here was done on a MacBook Pro running OS X Mavericks.

I couldn’t encrypt the working hard drive because it was in use, which kind of defeats the purpose of what I was attempting. However, I was able to create a hidden/secret volume, so I’m just going with that.

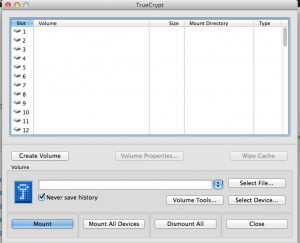

After starting up TrueCrypt, select “Create Volume.”

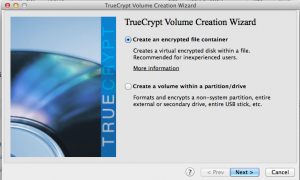

Follow the wizard directions to “Create an encrypted file container.”

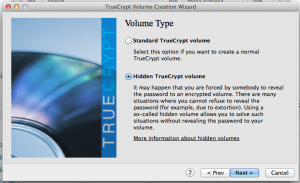

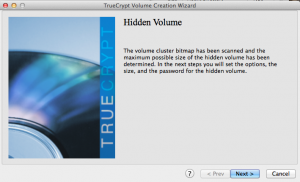

Next, select “Hidden TrueCrypt volume.”

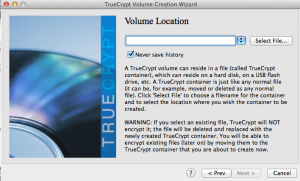

Select a file location for the TrueCrypt volume. This volume will appear as a file which can then be mounted by the TrueCrypt software. Once mounted, it can be accessed just like another filesystem with directory trees, files, etc.

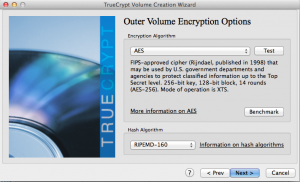

Choose whatever encryption algorithm works for your environment. Testing a few options first is always a good idea.

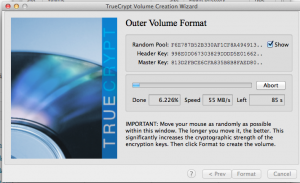

The Outer Volume Format window is slightly peculiar: you just move the mouse around the window a lot to generate randomness for the encryption keys.

After selecting “Format,” the outer volume for the hidden/secret volume will be created. This outer volume contains the hidden volume and can act as a decoy. The wizard then continues with the hidden volume creation.

It’s basically identical to the earlier outer volume process.
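The mouse wiggling is there to gather unpredictable input for key generation. As a rough illustration of the idea only (this is not TrueCrypt’s actual random number generator, and the class and method names are made up for this post), each mouse event can be hashed into a running pool:

```python
import hashlib
import os
import struct


class EntropyPool:
    """Toy entropy pool: folds mouse events into a SHA-256 state."""

    def __init__(self) -> None:
        # Start from OS randomness so the pool is never empty.
        self._state = hashlib.sha256(os.urandom(32)).digest()

    def add_mouse_event(self, x: int, y: int, timestamp_ns: int) -> None:
        # Pack the coordinates and timestamp, then mix them into the state.
        sample = struct.pack("<iiQ", x, y, timestamp_ns)
        self._state = hashlib.sha256(self._state + sample).digest()

    def derive_key(self) -> bytes:
        # Hash with a label so the raw pool state is never handed out directly.
        return hashlib.sha256(b"key" + self._state).digest()


pool = EntropyPool()
# Simulated mouse movements; a real tool would read these from the GUI.
for x, y, t in [(10, 20, 1_000), (13, 27, 2_500), (40, 22, 4_100)]:
    pool.add_mouse_event(x, y, t)
print(pool.derive_key().hex())
```

The more you move the mouse, the more hard-to-predict samples end up mixed into the pool, which is why the wizard encourages plenty of movement before you click “Format.”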

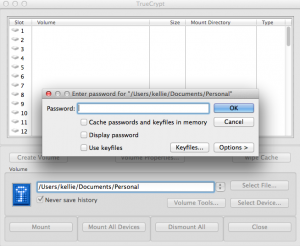

Now, to access the two volumes, open TrueCrypt and mount the file you created. You can enter the password for either the hidden or the decoy volume, depending on which one you want to access.

So why this outer volume/hidden volume setup? Say that, somehow, someone knew you had the TrueCrypt volume and they were forcing you to provide the password. Well, thank goodness you have a decoy! They’ll think they’re getting the goods when really you are only supplying them with decoy files, while the hidden ones lie secretly nestled inside the decoy, undetected.
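How does one file respond to two passwords? As I understand it, TrueCrypt tries the password against the outer volume header first and then against the hidden volume header, and whichever one decrypts to a recognizable marker decides what gets mounted. Here is a toy Python model of that selection logic. Everything about the layout (the XOR "cipher", the 16-byte salt, the iteration count, the function names) is a simplification I made up for illustration, and it is nowhere near secure enough for real use.

```python
import hashlib
import os

MAGIC = b"TRUE"  # marker a correctly decrypted header starts with (toy layout)


def _keystream(password: str, salt: bytes, length: int) -> bytes:
    # Stretch the password into as many bytes as the header body needs.
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 1000, dklen=length)


def make_header(password: str, label: bytes) -> bytes:
    """Build a toy header: random salt followed by the XOR-encrypted marker and label."""
    salt = os.urandom(16)
    plaintext = MAGIC + label
    stream = _keystream(password, salt, len(plaintext))
    return salt + bytes(a ^ b for a, b in zip(plaintext, stream))


def try_header(password: str, header: bytes):
    """Return the label if the password decrypts this header, else None."""
    salt, body = header[:16], header[16:]
    stream = _keystream(password, salt, len(body))
    plaintext = bytes(a ^ b for a, b in zip(body, stream))
    return plaintext[len(MAGIC):] if plaintext.startswith(MAGIC) else None


def mount(password: str, outer_header: bytes, hidden_header: bytes):
    """Try the outer header first, then the hidden one."""
    for header in (outer_header, hidden_header):
        label = try_header(password, header)
        if label is not None:
            return label
    return None


outer = make_header("decoy-password", b"outer")
hidden = make_header("real-password", b"hidden")
print(mount("decoy-password", outer, hidden))
print(mount("real-password", outer, hidden))
print(mount("wrong-password", outer, hidden))
```

Without the right password, a header just looks like random bytes, which is the property that lets the hidden volume go unnoticed inside the decoy.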

Wow, what a long post but there you have it, the joys of encryption!