Best Practices: Windows Live Analysis

In some situations a computer cannot be powered off for an investigation. In these circumstances a live incident response is performed. An advantage of a live investigation is that both volatile data (such as running processes, network connections and memory contents) and non-volatile data can be analyzed. The victim machine is the target of these investigations. These best practices use tools specific to Windows machines; however, some of the commands will work in other environments.

1. When performing a digital forensic live incident response investigation, it is important to set up the environment correctly. Create a disk or network drive containing the executables of the tools that will be used to uncover evidence. Recommended tools include:

- Sysinternals Suite (http://technet.microsoft.com/en-us/sysinternals) – A collection of Windows utilities, many of which are useful for forensic analysis.

- FPort (http://www.scanwith.com/Fport_download.htm) – Enumerates open ports and the executables bound to them.

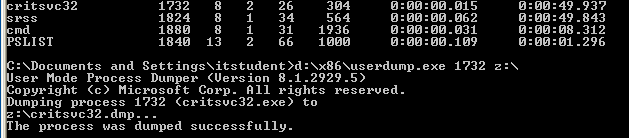

- UserDump (http://www.microsoft.com/en-us/download/details.aspx?id=4060, included in the User Mode Process Dumper package) – Creates memory dumps of specific processes.

- Netcat (http://nmap.org/ncat, the Nmap project's Ncat implementation) – Creates TCP channels between devices that can be used to transfer information.

Warning: Only standalone executables are used in an investigation because software installers write to the victim machine's disk and alter its memory. Deleted data is not immediately removed from disk, so incriminating data that was deleted may still be recoverable; the only way to completely get rid of data is to overwrite it. An installer could overwrite such data, so installers must not be used. This is also why live investigations avoid writing any information to the victim machine's disk (Jones, Bejtlich and Rose).

2. Since data must not be written to the victim machine's disk, it is best to map a network drive or use Netcat to transfer information between the analysis machine and the victim machine.
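As an illustration of the collection side only, the sketch below shows a minimal Python listener that could run on the analysis machine: it accepts one TCP connection, writes everything received to a file on the analyst's own disk, and returns a SHA-256 digest of the received bytes so the transfer can be documented. It assumes the victim side pipes a tool's output into Netcat pointed at this port (the exact Netcat flags vary between versions). The function names and port 9999 are hypothetical choices, and nothing in this sketch runs on the victim machine.

```python
import hashlib
import socket


def make_listener(host: str = "0.0.0.0", port: int = 9999) -> socket.socket:
    """Open a TCP socket on the analysis machine that waits for one sender."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    server.bind((host, port))
    server.listen(1)
    return server


def receive_one_transfer(server: socket.socket, out_path: str) -> str:
    """Accept one connection, save everything it sends to out_path on the
    analysis machine, and return the SHA-256 hex digest of the received bytes."""
    conn, _peer = server.accept()
    digest = hashlib.sha256()
    with conn, open(out_path, "wb") as out:
        while True:
            chunk = conn.recv(65536)
            if not chunk:  # empty read: the sender closed the connection
                break
            out.write(chunk)
            digest.update(chunk)
    return digest.hexdigest()
```

For example, receive_one_transfer(make_listener(port=9999), "netstat_an.txt") waits for a single transfer, stores it, and returns its hash for the case notes.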

Warning: Record a checksum for every file created and every tool used so that their integrity can be validated against tampering. A checksum here is the output of a hash function (such as MD5 or SHA-1) computed over a file. Changing even one character of the code or file produces a different checksum, which is what makes checksums useful for validating content. Likewise, each version of an application has its own checksum, distinct from those of other versions of the software. File Checksum Integrity Verifier (http://support.microsoft.com/kb/841290) is a good tool for creating checksums.
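FCIV is the tool named above; the same principle can be sketched in Python with the standard hashlib module. The hypothetical file_checksum helper hashes a file in fixed-size chunks, and verify recomputes the hash later and compares it with the value recorded at collection time. SHA-256 is an assumed default here; "md5" or "sha1" can be passed instead to match checksums produced by other tools.

```python
import hashlib


def file_checksum(path: str, algorithm: str = "sha256") -> str:
    """Hash a file in 1 MiB chunks so large evidence files need not fit in memory."""
    h = hashlib.new(algorithm)
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest()


def verify(path: str, recorded_hex: str, algorithm: str = "sha256") -> bool:
    """True if the file still matches the checksum recorded when it was collected."""
    return file_checksum(path, algorithm) == recorded_hex.strip().lower()
```

Changing a single byte of the file makes verify return False, which is exactly the property the warning relies on.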

3. The first step in the investigation is to record the date and time on the victim machine. The native commands date /t and time /t display them without prompting for a new value. This information helps correlate events when an investigation spans multiple devices. The recorded date and time should be compared against a trustworthy time server.

Warning: Accurate time records are very important when time-sensitive evidence is presented in a case (Jones, Bejtlich and Rose).

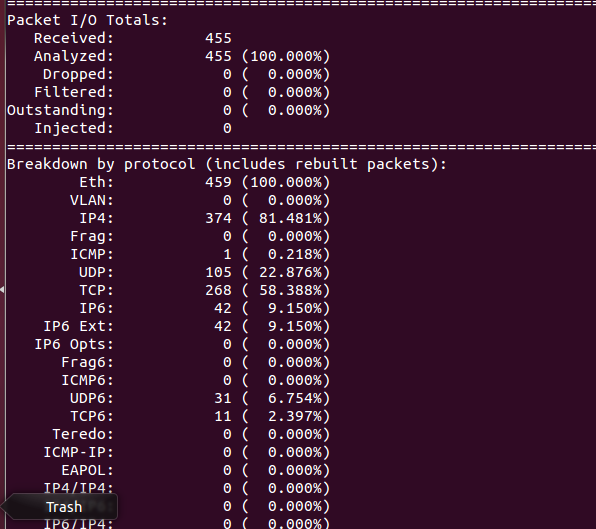
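Recording the victim's clock next to a trusted reference reading makes it possible to place that machine's timestamps on a common timeline with other devices. The sketch below is a minimal illustration with hypothetical helper names; it assumes both readings were taken at (approximately) the same moment and that the offset did not drift during the period of interest.

```python
from datetime import datetime, timedelta


def clock_offset(victim_reading: datetime, reference_reading: datetime) -> timedelta:
    """Positive result: the victim clock is ahead of the trusted source."""
    return victim_reading - reference_reading


def to_reference_time(victim_timestamp: datetime, offset: timedelta) -> datetime:
    """Shift a timestamp recorded by the victim onto the trusted timeline."""
    return victim_timestamp - offset


# Illustrative readings: victim shows 14:05:30 while the reference shows 14:02:00.
offset = clock_offset(datetime(2024, 1, 1, 14, 5, 30), datetime(2024, 1, 1, 14, 2, 0))
print(offset)  # 0:03:30
print(to_reference_time(datetime(2024, 1, 1, 13, 50, 0), offset))  # 2024-01-01 13:46:30
```

In this example the victim clock is 3 minutes 30 seconds fast, so an event it logged at 13:50:00 actually happened at 13:46:30 reference time.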

4. Current network connections are analyzed next to check for unusual connections established to the computer or unusual listening ports. The native command netstat -an displays all active connections and listening ports, with addresses and port numbers in numeric form.

Warning: Look for established connections that are not authorized or that communicate with unfamiliar IP addresses. Ports higher than 515 are not normally opened by the operating system and should be flagged as suspicious (Jones, Bejtlich and Rose).
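Reviewing netstat -an output by eye is error-prone on a busy machine, so the checks in this warning can be sketched as a small Python parser run on the analysis machine against the saved output. It assumes the usual English-language Windows column layout (Proto, Local Address, Foreign Address, State, with no State column for UDP). The 515 threshold comes from the warning above; known_remote_hosts is a hypothetical allow-list the investigator would supply.

```python
from dataclasses import dataclass


@dataclass
class Connection:
    proto: str
    local_host: str
    local_port: int
    foreign_host: str
    foreign_port: str  # kept as text because UDP entries show "*"
    state: str         # empty for UDP, which has no connection state


def split_endpoint(endpoint: str) -> tuple[str, str]:
    # Split on the last ":" so IPv6 endpoints such as "[::]:135" also work.
    host, _, port = endpoint.rpartition(":")
    return host, port


def parse_netstat_an(text: str) -> list[Connection]:
    conns = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 3 or fields[0] not in ("TCP", "UDP"):
            continue  # skip titles, column headers and blank lines
        local_host, local_port = split_endpoint(fields[1])
        foreign_host, foreign_port = split_endpoint(fields[2])
        state = fields[3] if len(fields) > 3 else ""
        conns.append(Connection(fields[0], local_host, int(local_port),
                                foreign_host, foreign_port, state))
    return conns


def flag_suspicious(conns, known_remote_hosts, port_threshold=515):
    """Return (connection, reason) pairs worth a closer look."""
    flagged = []
    for c in conns:
        # A bound UDP port has no state, so treat it like a TCP listener.
        listening = c.state == "LISTENING" or c.proto == "UDP"
        if c.state == "ESTABLISHED" and c.foreign_host not in known_remote_hosts:
            flagged.append((c, "established to unfamiliar host"))
        elif listening and c.local_port > port_threshold:
            flagged.append((c, f"listening above port {port_threshold}"))
    return flagged
```

The threshold is a parameter rather than a constant so the investigator can tighten or relax it for the machine's known role.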

5. FPort is used to identify the executables bound to open ports. Unknown processes using a port should be flagged as suspicious and analyzed.

6. On machines older than Windows Server 2003, connection records in the event log identify a remote machine by its NetBIOS name instead of its IP address. To verify that a NetBIOS name identifies a unique machine, use the command nbtstat -c, which lists the cache of remote NetBIOS names and their IP addresses.

Warning: An attacker could change a machine's NetBIOS name, perform an attack, and then change it to another name. The logs would then show the name used during the attack rather than the current one, leading investigators to a dead end. This is why it is important to check that a NetBIOS name is unique (Jones, Bejtlich and Rose).
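One way to act on this warning is to look for conflicts in the NetBIOS name cache: an IP address cached under more than one name (as might happen if a machine was renamed), or a name cached with more than one address. The Python sketch below assumes cache entries appear as lines of the form NAME <suffix> UNIQUE address life, as in typical nbtstat -c output, and that names contain no spaces; the helper names are hypothetical.

```python
import re
from collections import defaultdict

# Assumed entry layout: NAME  <20>  UNIQUE  192.168.1.20  597
ENTRY = re.compile(
    r"^\s*(?P<name>\S+)\s+<(?P<suffix>[0-9A-Fa-f]{2})>\s+(?P<kind>UNIQUE|GROUP)"
    r"\s+(?P<ip>\d{1,3}(?:\.\d{1,3}){3})\s+(?P<life>\d+)\s*$"
)


def parse_nbtstat_cache(text):
    """Return (name, ip) pairs for UNIQUE entries found in nbtstat -c output."""
    pairs = []
    for line in text.splitlines():
        m = ENTRY.match(line)
        if m and m.group("kind") == "UNIQUE":
            pairs.append((m.group("name").upper(), m.group("ip")))
    return pairs


def find_conflicts(pairs):
    """Return ({name: ips} for names with several IPs, {ip: names} for IPs with several names)."""
    ips_by_name = defaultdict(set)
    names_by_ip = defaultdict(set)
    for name, ip in pairs:
        ips_by_name[name].add(ip)
        names_by_ip[ip].add(name)
    return (
        {n: ips for n, ips in ips_by_name.items() if len(ips) > 1},
        {ip: ns for ip, ns in names_by_ip.items() if len(ns) > 1},
    )
```

Entries for the same name with different suffixes (for example <00> and <20>) collapse into one pair, so only genuine name/address conflicts are reported.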

7. It is also important to check which users are currently logged on to the machine, both locally and remotely. The Sysinternals tool PsLoggedOn performs this check.

Warning: An unauthorized user may be logged on, or an account may have been hijacked remotely (Jones, Bejtlich and Rose).

8. The routing table should be examined to ensure that an attacker or process has not manipulated the traffic from the device. The command netstat -rn displays the routing table. Unfamiliar routes or connections should be flagged as suspicious and used to formulate a hypothesis.

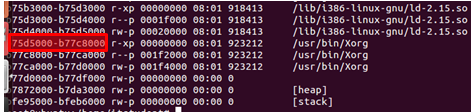
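Unfamiliar routes are easiest to spot by comparing against a known-good baseline, for example a routing table captured from a comparable clean machine. The sketch below, with hypothetical helper names, assumes the Windows IPv4 route table layout (Network Destination, Netmask, Gateway, Interface, Metric) and ignores the IPv6 and persistent-route sections.

```python
import ipaddress


def _is_ipv4(token):
    try:
        ipaddress.IPv4Address(token)
        return True
    except ValueError:
        return False


def parse_ipv4_routes(text):
    """Return (destination, netmask, gateway, interface, metric) tuples."""
    routes = []
    for line in text.splitlines():
        f = line.split()
        # Active route lines have exactly five columns and start with two IPv4 values.
        if len(f) == 5 and _is_ipv4(f[0]) and _is_ipv4(f[1]):
            dest, mask, gateway, iface, metric = f
            routes.append((dest, mask, gateway, iface, int(metric)))
    return routes


def unfamiliar_routes(routes, baseline):
    """Routes whose (destination, netmask, gateway) is not in the baseline set."""
    return [r for r in routes if (r[0], r[1], r[2]) not in baseline]
```

Comparing on destination, netmask and gateway (and not metric) keeps the check focused on where traffic is sent.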

9. The Sysinternals tool PsList lists running processes, including any processes flagged in earlier steps. If another process started around the same time as a suspicious process, it should also be flagged: the two processes might have been started by the same attack or service.
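In its default output, PsList reports how long each process has been running (its elapsed time) rather than a wall-clock start time, so start times can be estimated by subtracting the elapsed time from the moment the listing was captured. The sketch below assumes the name, PID and elapsed-time columns have already been extracted, with elapsed times written as hours:minutes:seconds such as 5:01:59.500. The 60-second window and the helper names are hypothetical choices.

```python
from datetime import datetime, timedelta


def parse_elapsed(text: str) -> timedelta:
    """Parse an elapsed time written as hours:minutes:seconds, e.g. '5:01:59.500'."""
    hours, minutes, seconds = text.split(":")
    return timedelta(hours=int(hours), minutes=int(minutes), seconds=float(seconds))


def start_times(processes, collected_at: datetime) -> dict:
    """processes: iterable of (name, pid, elapsed_text) tuples.
    Returns {pid: (name, estimated_start_time)}."""
    return {pid: (name, collected_at - parse_elapsed(elapsed))
            for name, pid, elapsed in processes}


def started_near(starts: dict, suspicious_pid: int,
                 window: timedelta = timedelta(seconds=60)) -> list:
    """PIDs other than suspicious_pid whose estimated start is within `window` of it."""
    _, anchor = starts[suspicious_pid]
    return sorted(pid for pid, (_, started) in starts.items()
                  if pid != suspicious_pid and abs(started - anchor) <= window)
```

Because the estimate depends on when the listing was captured, the capture time should be recorded alongside the output (and corrected with the clock offset from step 3 if needed).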

10. The Sysinternals tool PsService is used to look at running services. Services without descriptions are probably not standard services maintained by the operating system and should be treated as suspicious.

Warning: Services are used to hide attacking programs and should be analyzed carefully (Jones, Bejtlich and Rose).

11. Scheduled jobs on a Windows machine can be viewed with the at command (on newer versions of Windows, where at is deprecated, use schtasks /query). An attacker with the right privileges can schedule malicious jobs to run at designated times.

Warning: An attacker can run a job at odd times, such as 2:00 AM, when it would likely go unnoticed by most users (Jones, Bejtlich and Rose).
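The off-hours check in this warning can be sketched in Python once job times have been read from the scheduler listing. The window of 22:00 to 06:00 is an assumed definition of "odd times" (the warning only gives 2:00 AM as an example), job records are hypothetical (id, time, command) tuples, and times are assumed to use the 12-hour AM/PM form.

```python
from datetime import datetime, time


def parse_clock(text):
    """Parse a 12-hour clock time such as '2:00 AM'."""
    return datetime.strptime(text.strip(), "%I:%M %p").time()


def in_window(t, start, end):
    """True if t falls in [start, end); the window may wrap past midnight."""
    if start <= end:
        return start <= t < end
    return t >= start or t < end


def off_hours_jobs(jobs, start=time(22, 0), end=time(6, 0)):
    """jobs: iterable of (job_id, time_text, command). Returns the off-hours ones."""
    return [job for job in jobs if in_window(parse_clock(job[1]), start, end)]
```

A job outside the window is not automatically benign; this only narrows down which entries to review first.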

12. Examining open files may reveal information relevant to the investigation. The Sysinternals tool PsFile lists files on the victim machine that are opened remotely, which cannot otherwise be seen immediately on that machine.

13. Process dumps are important for reviewing the actions of a process. UserDump can create a dump file of a suspicious process; save the dump to the mapped network drive rather than the victim machine's disk.

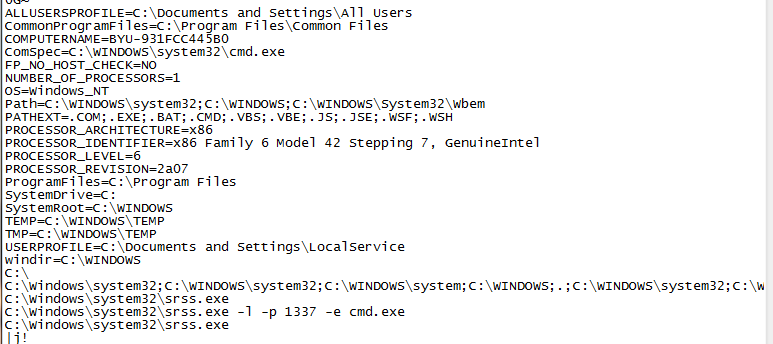

14. Next, the Sysinternals tool Strings can be used to extract readable words and sentences from the dump file. Reviewing this material helps in understanding what actions a process or executable performs.
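To show what this extraction does to a dump file, here is a minimal Python sketch: it finds runs of printable ASCII characters and runs of printable characters encoded as UTF-16LE (the form Windows commonly uses for strings in memory). The three-character minimum is an assumption chosen to resemble Strings' default, and only ASCII-range UTF-16 characters are recognized; this is a sketch, not a reimplementation of the tool.

```python
import re


def extract_strings(data: bytes, min_len: int = 3) -> list[str]:
    """Return printable ASCII and UTF-16LE strings of at least min_len characters,
    in the order they appear in the data."""
    ascii_re = re.compile(rb"[\x20-\x7e]{%d,}" % min_len)
    # UTF-16LE text in the ASCII range: each printable byte followed by a zero byte.
    utf16_re = re.compile(rb"(?:[\x20-\x7e]\x00){%d,}" % min_len)
    found = [(m.start(), m.group().decode("ascii")) for m in ascii_re.finditer(data)]
    found += [(m.start(), m.group().decode("utf-16-le")) for m in utf16_re.finditer(data)]
    return [s for _, s in sorted(found)]
```

Usage: extract_strings(open("process.dmp", "rb").read()) on the analysis machine, where process.dmp is a hypothetical dump file name; for large dumps, reading in chunks would be more memory-friendly.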

15. After the volatile information is analyzed, non-volatile information can be examined. This includes logs, incriminating files stored on the system, internet history, stored emails and any other file on disk. This material can be reviewed manually.

16. When performing a live response investigation, it is important to be paranoid and to research anything that is not clearly a familiar service, process, file or activity.