AztecOO: error: identifier “sswap_” is undefined

Error

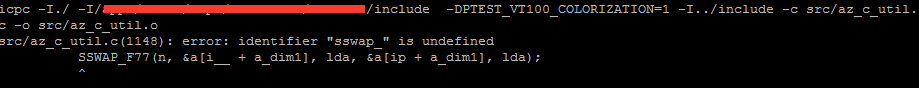

I’ve been trying to compile the AztecOO library outside of Trilinos. To do so, I pulled the AztecOO package source code out of the Trilinos source tree and wrote my own Makefile to compile it. My Makefile used the same settings reported in the Trilinos Makefile.export.AztecOO file.

To prepare this build, I had to copy the AztecOO_config.h file from a built copy of Trilinos into the source directory of the Aztec code I was compiling. This file is generated when Trilinos is built, so it is not in the source tree. With that in place, I had all the files needed to compile Aztec. However, I ran into the following error:

error: identifier "sswap_" is undefined

Solution

SWAP_F77 is a macro defined at the top of az_c_util.c that expands through the F77_BLAS_MANGLE macro, which is defined in AztecOO_config.h. All F77_BLAS_MANGLE does is append a “_” to the name passed as its argument, so the call ends up as sswap_. That matches the symbol names of the Fortran BLAS library, but I am using the C version, where the names have no trailing underscore. To fix this, I edited every F77 mangling macro in AztecOO_config.h to expand to just “name” instead of “name ## _”.

I also added the following include statement to the file that raises the error, src/az_c_util.c. This required adding an -I flag to the compile line for the directory containing mkl_blas.h:

#include "mkl_blas.h"